Interactive Visual Exploration

January 2009 - October 2009

Course: PhD project

Software: C# and C++

Description

Experiential image retrieval systems aim to provide the user with a natural and intuitive search experience. The goal is to empower users to navigate large collections according to their own needs and preferences, while simultaneously giving them an accurate sense of what the database has to offer. In this project, a novel interface lets the user visually and interactively explore the feature space around relevant images and focus the search on only those regions of feature space that are relevant. In addition, the user can perform deep exploration, which enables transparent navigation to promising regions of feature space that would otherwise remain unreachable.

Exploring feature space

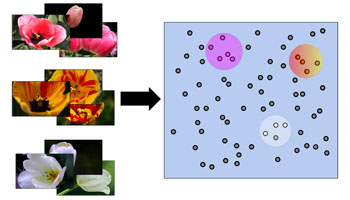

In a low-level feature space, images of interest can be spread out over multiple areas. For example, in a feature space built from color features, images of differently colored tulips are likely to be found in several parts of the space, see Figure 1. Such a search can be performed using multiple query points, but exploring the feature space around each query point is often a slow process.

Figure 1. Depending on the features used, images of the same category may end up in several parts of feature space.
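One simple way to search with multiple query points is to rank every image by its distance to the closest query point, so that each query point pulls in its own region of feature space. The C++ sketch below assumes Euclidean distance over plain `std::vector<double>` feature vectors; `Feature`, `euclidean` and `rankByNearestQuery` are hypothetical names, and minimum-distance aggregation is an illustrative choice, not necessarily the one used in this project.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <numeric>
#include <vector>

// Hypothetical feature representation: one low-level feature vector per image.
using Feature = std::vector<double>;

// Euclidean distance between two feature vectors of equal length.
double euclidean(const Feature& a, const Feature& b) {
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double diff = a[i] - b[i];
        sum += diff * diff;
    }
    return std::sqrt(sum);
}

// Returns image indices ordered by their distance to the closest query point.
// Ties keep their original collection order because the sort is stable.
std::vector<std::size_t> rankByNearestQuery(const std::vector<Feature>& collection,
                                            const std::vector<Feature>& queries) {
    assert(!queries.empty());
    std::vector<double> score(collection.size(), std::numeric_limits<double>::infinity());
    for (std::size_t i = 0; i < collection.size(); ++i) {
        for (const Feature& q : queries) {
            score[i] = std::min(score[i], euclidean(collection[i], q));
        }
    }
    std::vector<std::size_t> order(collection.size());
    std::iota(order.begin(), order.end(), std::size_t{0});
    std::stable_sort(order.begin(), order.end(),
                     [&score](std::size_t a, std::size_t b) { return score[a] < score[b]; });
    return order;
}
```

With two query points placed in different parts of the space, images near either point rank highly, which mimics the differently colored tulips landing in separate clusters.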

Most interfaces present only a limited number of images at a time, which puts a heavy burden on the user: navigating the space around each query point requires many iterations of feedback. To reduce user effort and allow efficient refinement of the relevant search space, we propose a novel technique whereby the user can visually and interactively explore the feature space around an image and transparently navigate from one area in feature space to another to discover more relevant images.

Interactive visualization

In retrieval systems based on relevance feedback, the user indicates his or her preferences regarding the presented results by selecting images as positive and negative examples. These feedback samples then determine a relevance ranking on the image collection, and new images are presented to the user for the next round of feedback. In contrast, we propose to integrate feedback selection with a visualization mechanism that allows the user to quickly explore the local feature space surrounding an example image. This interaction provides a better sense of the local structure of the database and allows the user to center on the examples that best capture the desired qualities. The approach, which we call deep exploration, works as follows. At the start of an exploration interaction, only the selected image is displayed. Then, as the user adjusts the exploration front, more and more of its nearest neighbors are shown, see Figure 2.

Figure 2. Adjusting the exploration front.
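The exploration front can be thought of as a nearest-neighbor query whose size the user controls. The C++ fragment below is a minimal sketch that assumes the front is expressed as a number of neighbors (it could equally be a distance radius) and that distances are Euclidean; `explorationFront` is a hypothetical helper, not part of the project's code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Feature = std::vector<double>;

// Squared Euclidean distance; sufficient for ranking neighbors.
double squaredDistance(const Feature& a, const Feature& b) {
    assert(a.size() == b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double diff = a[i] - b[i];
        sum += diff * diff;
    }
    return sum;
}

// Hypothetical helper: the images displayed when the exploration front is set to
// `front` neighbors. Element 0 is the focus image; the rest are its nearest
// neighbors in increasing order of distance.
std::vector<std::size_t> explorationFront(const std::vector<Feature>& collection,
                                          std::size_t focus, std::size_t front) {
    assert(focus < collection.size());
    std::vector<std::size_t> others;
    for (std::size_t i = 0; i < collection.size(); ++i) {
        if (i != focus) others.push_back(i);
    }
    front = std::min(front, others.size());
    const auto frontEnd = others.begin() + static_cast<std::ptrdiff_t>(front);
    // Only the first `front` positions need to be in sorted order.
    std::partial_sort(others.begin(), frontEnd, others.end(),
                      [&](std::size_t a, std::size_t b) {
                          return squaredDistance(collection[a], collection[focus]) <
                                 squaredDistance(collection[b], collection[focus]);
                      });
    std::vector<std::size_t> shown{focus};
    shown.insert(shown.end(), others.begin(), frontEnd);
    return shown;
}
```

Dragging the front outward would correspond to calling `explorationFront(collection, focus, k)` with increasing `k`, revealing neighbors one by one in order of distance.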

The actual deep exploration occurs when the user encounters an image of interest within the exploration range and decides to transfer the focus to this image, continuing the exploration from there, see Figure 3. This lets the user easily reach other areas of feature space. The focus can be transferred as many times as the user wants, jumping from one area of feature space to another.

Figure 3. Deep exploration.
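Transferring the focus can be modelled as a walk over the collection in which each step must land on an image that is currently visible inside the front. The hypothetical `ExplorationSession` class below keeps a trail of earlier foci so the user can step back; it assumes a fixed front size and Euclidean distance, and only illustrates the idea described above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

using Feature = std::vector<double>;

// Hypothetical session: tracks the current focus image and the trail of earlier foci.
class ExplorationSession {
public:
    ExplorationSession(std::vector<Feature> collection, std::size_t start, std::size_t front)
        : collection_(std::move(collection)), front_(front), trail_{start} {
        assert(start < collection_.size());
    }

    std::size_t focus() const { return trail_.back(); }
    const std::vector<std::size_t>& trail() const { return trail_; }

    // Indices of the `front_` nearest neighbors of the current focus (focus excluded).
    std::vector<std::size_t> visibleNeighbors() const {
        const Feature& f = collection_[focus()];
        std::vector<std::pair<double, std::size_t>> byDistance;
        for (std::size_t i = 0; i < collection_.size(); ++i) {
            if (i == focus()) continue;
            double d = 0.0;
            for (std::size_t k = 0; k < f.size(); ++k) {
                const double diff = collection_[i][k] - f[k];
                d += diff * diff;
            }
            byDistance.emplace_back(d, i);
        }
        std::sort(byDistance.begin(), byDistance.end());  // by distance, then by index
        std::vector<std::size_t> out;
        for (std::size_t j = 0; j < std::min(front_, byDistance.size()); ++j) {
            out.push_back(byDistance[j].second);
        }
        return out;
    }

    // Deep exploration step: move the focus to an image currently inside the front.
    bool transferFocus(std::size_t candidate) {
        const std::vector<std::size_t> shown = visibleNeighbors();
        if (std::find(shown.begin(), shown.end(), candidate) == shown.end()) return false;
        trail_.push_back(candidate);
        return true;
    }

    // Return to the previous focus, if there is one.
    bool back() {
        if (trail_.size() < 2) return false;
        trail_.pop_back();
        return true;
    }

private:
    std::vector<Feature> collection_;
    std::size_t front_;
    std::vector<std::size_t> trail_;
};
```

For example, with a front of two neighbors on the one-dimensional points 0, 1, 2, 3 and 4, the point 4 is not visible from 0, yet the chain of focus transfers 0, 2, 3, 4 reaches it.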

This technique is particularly useful when the search seems to be 'stuck' and cannot improve with the current collection of relevant images. Moreover, using deep exploration to move from an isolated relevant image to a more densely populated relevant area directly benefits the feedback analysis, because the additional examples allow our feature selection and weighting approach to perform better.

Relevance feedback

When the user decides to treat a centered image as a positive or negative example, all images within the exploration front are treated as such as well. The retrieval system collects all feedback and performs relevance feedback analysis to determine the most relevant images, which best match the user's interests, and the most informative images, which allow the user to easily continue exploring the feature space.
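The labeling rule above can be stated compactly in code: one positive or negative judgment on the centered image is copied to every image inside the exploration front. In the sketch below, `FeedbackStore` and `Label` are hypothetical names, and letting later feedback override earlier feedback for the same image is an assumption; the subsequent relevance and informativeness analysis is not shown.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

enum class Label { Positive, Negative };

// Hypothetical feedback store: maps an image index to the most recent label it received.
class FeedbackStore {
public:
    // `shown` holds the centered image followed by the images inside the exploration front.
    void labelFront(const std::vector<std::size_t>& shown, Label label) {
        assert(!shown.empty());
        for (std::size_t idx : shown) {
            labels_[idx] = label;  // later feedback overrides earlier feedback
        }
    }

    // All images carrying `label`, in ascending index order (std::map is ordered).
    std::vector<std::size_t> withLabel(Label label) const {
        std::vector<std::size_t> out;
        for (const auto& entry : labels_) {
            if (entry.second == label) out.push_back(entry.first);
        }
        return out;
    }

private:
    std::map<std::size_t, Label> labels_;
};
```

A single click on the centered image therefore yields as many feedback samples as there are images in the front, which is what makes the explored neighborhoods useful for the feature weighting described next.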

Feature selection and weighting

The collection of explored feedback images provides a convenient setup for local feature selection. In particular, it allows us to take prior feature density into account by giving higher weight to feature regions where images cluster unexpectedly. This is desirable because, for features to which the user is indifferent, examples will naturally cluster in regions of high prior density; our method suppresses the influence of such features. Our approach is to estimate the prior feature value density corresponding to the local clustering of examples around the image under study, and to set the distance function weights accordingly. To illustrate, the left panel of Figure 4 shows an image of a sunset explored with the default feature weights. After several iterations of feedback the feature weights have changed, and when the image is re-explored, as shown in the right panel, its nearest neighbors are more relevant than they were before.

Figure 4. Automatic feature weighting results in more relevant nearest neighbors.
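As a rough illustration of prior-aware weighting, the sketch below gives each feature dimension `d` a weight of `-log(p_d)`, where `p_d` is the fraction of the whole collection whose value in that dimension falls inside the interval spanned by the feedback examples. Dimensions in which the examples cluster in a sparsely populated region receive a high weight, while dimensions in which the examples merely follow the prior density (for instance, span the whole range) receive a weight near zero. This interval-counting estimate is a simple stand-in chosen for brevity, not the density estimator from the MM2009 paper; `priorAwareWeights` and `weightedDistance` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Feature = std::vector<double>;

// Hypothetical weighting: w_d = -log(p_d), where p_d is the fraction of the collection
// whose feature d lies in [min, max] of the examples' values for feature d.
std::vector<double> priorAwareWeights(const std::vector<Feature>& collection,
                                      const std::vector<Feature>& examples) {
    assert(!collection.empty() && !examples.empty());
    const std::size_t dims = collection.front().size();
    std::vector<double> weights(dims, 0.0);
    for (std::size_t d = 0; d < dims; ++d) {
        double lo = examples.front()[d];
        double hi = lo;
        for (const Feature& e : examples) {
            lo = std::min(lo, e[d]);
            hi = std::max(hi, e[d]);
        }
        std::size_t inside = 0;
        for (const Feature& x : collection) {
            if (x[d] >= lo && x[d] <= hi) ++inside;
        }
        // Count at least one image so the logarithm stays finite.
        const double p = static_cast<double>(std::max<std::size_t>(inside, 1)) /
                         static_cast<double>(collection.size());
        weights[d] = -std::log(p);  // p == 1 (no surprise) gives weight 0
    }
    return weights;
}

// Weighted Euclidean distance used to re-rank neighbors with the learned weights.
double weightedDistance(const Feature& a, const Feature& b, const std::vector<double>& w) {
    assert(a.size() == w.size() && b.size() == w.size());
    double sum = 0.0;
    for (std::size_t d = 0; d < w.size(); ++d) {
        sum += w[d] * (a[d] - b[d]) * (a[d] - b[d]);
    }
    return std::sqrt(sum);
}
```

In this sketch a feature whose examples cover every value in the collection gets weight zero, so differences along that feature no longer affect which neighbors appear when an image is re-explored.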

Publications

For more information and experimental results, take a look at the MM2009 paper in the publications section. Earlier work was published and presented at BNAIC2009 and ISPA2009.